Suppose you are a researcher wanting to investigate some aspect of facial recognition or facial detection. One thing you are going to want is a variety of faces that you can use for your system. You could, perhaps, find and possibly pay hundreds of people to have their faces enrolled in the system. Alternatively, you could look at some of the existing facial recognition and facial detection databases that fellow researchers and organizations have created in the past. Why reinvent the wheel if you do not have to?

Here is a selection of facial recognition databases that are available on the internet.

There are different rules and requirements when it comes to the usage of each of these databases. In particular, most of these databases are only available for non-commercial research purposes. Make certain that you read the terms of use for any database you intend to use. In most cases they will be shown on, or linked to, the database's webpage. Many of these databases also have specific requirements regarding how they must be referenced.

- Through continued cross-cultural studies, Dr. Ekman noticed that many of the apparent differences in facial expressions across cultures were due to context. To describe this phenomenon, Dr. Ekman coined the term display rules: rules we learn in the course of growing up about when, how, and to whom it is appropriate to show our emotional expressions.

- What are basic emotions? In 1970 Paul Ekman described six universal, or basic, emotions, based on extensive research in which people reported which emotion they thought was displayed in a picture. The six universal emotions he described are Happy, Sad, Angry, Surprised, Scared, and Disgusted; Neutral (no emotion) is usually listed alongside them.

1. 3D Mask Attack Database (3DMAD)

The 3D Mask Attack Database (3DMAD) is a biometric (face) spoofing database. It currently comprises 76500 frames covering 17 people. These were recorded using Kinect for both real access and spoofing attacks. Each frame consists of a depth image, the corresponding RGB image and manually annotated eye positions (with respect to the RGB image). The data was collected in 3 different sessions from the subjects. Five videos of 300 frames were captured for each session. The recordings were done under controlled conditions and depict frontal view and neutral expression.
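As a quick sanity check, the frame count quoted above follows directly from the recording protocol (17 people, 3 sessions each, 5 videos per session, 300 frames per video). The short Python sketch below just redoes that arithmetic; the variable names are illustrative and are not part of the database's own tooling.

```python
# Frame count implied by the 3DMAD recording protocol described above.
subjects = 17
sessions_per_subject = 3
videos_per_session = 5
frames_per_video = 300

total_frames = (
    subjects * sessions_per_subject * videos_per_session * frames_per_video
)
print(total_frames)  # 76500
```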

2. 3D_RMA database

This database was created with a 3D acquisition system based on structured light. The facial surface was acquired as a set of 3D coordinates using a projector and a camera. 120 people were asked to pose twice in front of the system. For each session, three shots were recorded with different (but limited) orientations of the head: straight forward / left or right / upward or downward.

3. 10k US Adult Faces Database

This database contains 10,168 natural face photographs and several measures for 2,222 of the faces, including memorability scores and computer vision and psychology attributes. The face images are JPEGs with 72 pixels/in resolution and 256-pixel height. The attribute data are stored in either MATLAB or Excel files. The database includes a software tool that allows you to export custom image sets from the database for your research.

4. The AR Face Database, The Ohio State University

This face database was created by Aleix Martinez and Robert Benavente at the Computer Vision Center (CVC) at the Universitat Autònoma de Barcelona (U.A.B.). It contains over 4,000 color images of 126 people's faces (70 men and 56 women). The images are frontal view faces depicting different facial expressions, illumination conditions, and add-ons like sunglasses and scarves. The pictures were taken under strictly controlled conditions. There were no restrictions placed on clothes, glasses, make-up or hair style.

5. AT & T - The Database of Faces

This database of faces contains a set of face images taken between April 1992 and April 1994 at the AT&T Laboratories, Cambridge. There are ten different images of each of 40 distinct subjects. Some images were taken at different times, varying the lighting, facial expressions (open / closed eyes, smiling / not smiling) and facial details (glasses / no glasses). All the images were taken against a dark plain background. The subjects are in an upright, frontal position.

6. The Basel Face Model (BFM)

3D Morphable Face Models are the center of the research at the Computer Vision Group at the University of Basel. The Basel Face Model is made available on the website. The Morphable Model uses registered 3D scans of 100 male and 100 female faces.

7. BioID Face DB - HumanScan AG, Switzerland (no longer available)

The BioID Face Database consists of 1521 gray level images with a resolution of 384x286 pixels. Each one shows the frontal view of the face of one of 23 different test persons. For comparison purposes, the set also contains manually set eye positions.

8. Binghamton University Facial Expression Databases: BP4D-Spontaneous Database, BU-3DFE Database (Static Data), BU-4DFE Database (Dynamic Data)

Researchers at Binghamton University have set up a number of databases that have been used for a number of 2D and 3D studies.

BU-3DFE (Binghamton University 3D Facial Expression) Database (Static Data) - to foster research in the fields of 3D face recognition and 3D face animation, Binghamton University created a 3D facial expression database (called the BU-3DFE database), which includes 2,500 facial expression models from 100 subjects (56% female, 44% male), ranging in age from 18 to 70 years. There is a variety of ethnic/racial ancestries, including White, Black, East-Asian, Middle-east Asian, Indian, and Hispanic Latino.

BU-4DFE (3D + time): A 3D Dynamic Facial Expression Database (Dynamic Data) - to analyze the facial behavior from a static 3D space to a dynamic 3D space, they extended the BU-3DFE to the BU-4DFE. They created a new high-resolution 3D dynamic facial expression database. They captured the 3D facial expressions at a video rate (25 frames per second). Six model sequences have been created for each subject showing six prototypic facial expressions (anger, disgust, happiness, fear, sadness, and surprise). Each expression sequence contains about 100 frames. The database includes 606 3D facial expression sequences captured from 101 subjects. There is a total of around 60,600 frame models. Each 3D model of a 3D video sequence has the resolution of around 35,000 vertices. The texture video has a resolution near 1040×1329 pixels per frame. The resulting database contains 58 female and 43 male subjects, with a variety of ethnic/racial ancestries, including Asian, Black, Hispanic/Latino, and White.

BP4D-Spontaneous: Binghamton-Pittsburgh 3D Dynamic Spontaneous Facial Expression Database - this is a third database, containing 3D video of spontaneous facial expressions in a diverse group of young adults. These show expressions of emotion and paralinguistic communication. The Facial Action Coding System was used in its creation. The database includes forty-one participants (23 women, 18 men). They were 18 to 29 years of age and were of a mix of races.

9. The Bosphorus Database

The Bosphorus Database is intended for those researching 3D and 2D human face processing tasks. These include expression recognition, facial action unit detection, facial action unit intensity estimation, face recognition under adverse conditions, deformable face modeling, and 3D face reconstruction. The database contains 105 subjects, with 4666 images of their faces. It contains up to 35 expressions per subject, with FACS scoring (including intensity and asymmetry codes for each AU). One-third of the subjects are professional actors or actresses. There are systematic head poses (13 yaw and pitch rotations). There is a mix of face occlusions (beard and mustache, hair, hand, eyeglasses).

10. Caltech Faces

Caltech is interested in the computational foundations of vision. They use this knowledge to design machine vision systems with applications to science, consumer products, entertainment, manufacturing, and defense. They study the human visual system using psychophysical experiments and building models of its function. They have built up databases of photos of different items. One collection contains 450 frontal face images of 27 people.

11. CAS-PEAL Face Database

The CAS-PEAL face database is a large-scale Chinese face database for training and evaluation that the Joint Research & Development Laboratory for Advanced Computer and Communication Technologies (JDL) of Chinese Academy of Sciences (CAS) constructed. The CAS-PEAL database contains 99,594 images of 1040 individuals (595 males and 445 females) displaying varying Pose, Expression, Accessory, and Lighting (PEAL).

12. ChokePoint

ChokePoint is a video dataset of 48 video sequences and 64,204 face images. The suggested uses of the dataset include person re-identification, image set matching, face quality measurement, face clustering, 3D face reconstruction, pedestrian/face tracking, and background estimation and subtraction.

13. The CMU Multi-PIE Face Database

This database contains more than 750,000 images of 337 people. The subjects were photographed from 15 viewpoints and under 19 illumination conditions. They displayed a range of facial expressions. There are some high-resolution frontal images.

14. Cohn-Kanade AU Coded Facial Expression Database (no longer available)

The Cohn-Kanade AU-Coded Facial Expression Database can be used for research in automatic facial image analysis and synthesis, as well as for perceptual studies. There are two sets of photos. The first set contains 486 sequences from 97 posers, following Ekman's FACS model (and given an emotional label). The second set contains both posed and non-posed (spontaneous) expressions and additional types of metadata. These are also FACS-coded.

15. The Color FERET Database, USA

The DOD Counterdrug Technology Program sponsored the Facial Recognition Technology (FERET) program, under which the FERET database of facial imagery was collected. The database is approximately 8.5 gigabytes.

16. The CVRL Biometrics Data Sets

The Computer Vision Research Laboratory at the Department of Computer Science and Engineering, University of Notre Dame, has collected a number of data sets and conducted baseline and advanced personal identification studies using biometric measurements. A number of the databases are available to groups of the public.

The Point and Shoot Face and Person Recognition Challenge (PaSC) - the goal of the Point and Shoot Face and Person Recognition Challenge (PaSC) was to assist the development of face and person recognition algorithms. The challenge focused on recognition from still images and videos captured with digital point-and-shoot cameras. The data from this challenge was collected at the University of Notre Dame.

FACE Features Set - this set contains feature patterns for imagery that are suitable for human-assisted face clustering. The features were computed for faces observed in blurry point-and-shoot videos. These include images of women seen before and after the application of makeup, and photographs of twins.

SN-Flip Crowd Video Database - SN-Flip captures variations in illumination, facial expression, scale, focus and pose for 190 subjects recorded in 28 crowd videos over a two-year period. The image quality is representative of typical web videos.

ND-2006 Dataset - this contains a total of 13,450 images showing six different types of expressions (Neutral, Happiness, Sadness, Surprise, Disgust, and Other). There are images of 888 distinct persons, with as many as 63 images per subject, in this data set.

3D Twins Expression Challenge ('3D TEC') Dataset - this data set contains 3D face scans for 107 pairs of twins. Each twin has a 3D face scan with a smiling expression and a scan with a neutral expression.

ND-TWINS-2009-2010 - this data set contains 24050 color photographs of the faces of 435 attendees at the Twins Days Festivals in Twinsburg, Ohio in 2009 and 2010.

Face Recognition Grand Challenge - the goal of the FRGC was to promote and advance face recognition technology, to support existing face recognition efforts of the U.S. Government. FRGC developed new face recognition techniques and systems. It was open to a wide variety of face recognition researchers and developers. It ran from May 2004 to March 2006. The FRGC Data Set contains 50,000 recordings. As these were used for experimentation, there is a wide variation between the images in the database. Some were recorded in a controlled setting, others in uncontrolled settings. There are quite strict controls on access to this database, so potential users will need to follow closely the website instructions.

17. Denver Intensity of Spontaneous Facial Action (DISFA) Database (no longer available)

DISFA is a non-posed facial expression database aimed at those who are interested in developing computer algorithms for automatic detection of action units (AUs) and their intensities, as described by FACS. There are videos of 27 adult subjects (12 females and 15 males) with different ethnicities. Two FACS experts scored the intensity of the AUs in the video frames on a scale of 0-5.

18. The EURECOM Kinect Face Dataset (EURECOM KFD)

This is a Kinect Face database of images of different facial expressions in different lighting and occlusion conditions. There are facial images of 52 people (14 females, 38 males) obtained by Kinect. The facial images of each person show nine states of different facial expressions.

19. The Extended M2VTS Database, University of Surrey

The XM2VTSDB multi-modal face database project contains four recordings of 295 subjects taken over a period of four months. Data can be obtained in the form of high-quality color images, 32 kHz 16-bit sound files, video sequences or as a 3D model.

20. Face Recognition Data, University of Essex

This database is provided to encourage comparative research, particularly for biometric processing comparisons and summary results. There are 7900 images of 395 individuals. These are of a mix of genders and races. The majority of the people represented are between 18 and 20 years old.

21. Face Video Database of the Max Planck Institute for Biological Cybernetics

This database contains videos of facial action units which were recorded at the MPI for Biological Cybernetics in the Face and Object Recognition Group, using the Videolab facilities of Mario Kleiner and Christian Wallraven. Facial movements were recorded from six different viewpoints at the same time while maintaining a very precise synchronization between the different cameras.

22. FaceScrub

This is a large dataset with over 100,000 face images featuring 530 people. The Vision and Interaction Group developed an approach to building face datasets that detected faces in images returned from searches for public figures on the Internet. Faces that did not belong to each queried person were automatically discarded.

23. FEI Face Database

The FEI face database is a Brazilian face database that contains a set of face images taken at the Artificial Intelligence Laboratory of FEI in São Bernardo do Campo, São Paulo, Brazil. There are 2800 images, made up of 14 images for each of 200 individuals (100 male and 100 female). Subjects are aged between 19 and 40.

24. FiA 'Face-in-Action' Dataset

The FiA dataset consists of 20-second videos of face data from 180 participants mimicking a passport checking scenario. The data was captured by six synchronized cameras from three different angles, with an 8-mm and 4-mm focal-length for each of these angles.

25. Georgia Tech Face Database

This database contains images of 50 people taken at the Center for Signal and Image Processing at Georgia Institute of Technology. Each person is represented by 15 color JPEG images with a cluttered background taken at resolution 640x480 pixels.

26. The Hong Kong Polytechnic University Biometric Research Center Databases

There are a number of biometric databases available at the Biometric Research Center website. Two of the databases relate to faces.

The Hong Kong Polytechnic University Hyperspectral Face Database (PolyU-HSFD) – this is designed to advance research and to provide researchers working in the area of face recognition with an opportunity to compare the effectiveness of face recognition algorithms. The dataset contains 300 hyperspectral image cubes from 25 volunteers with ages ranging from 21 to 33 (8 female and 17 male).

The Hong Kong Polytechnic University (PolyU) NIR Face Database is a large-scale NIR face database. It has collected NIR face images from 335 subjects. In total about 34,000 images were obtained.

27. Image Analysis and Biometrics Databases @ IIIT-Delhi

IIITD have created a number of biometric databases, including six facial databases. These are:

IIIT-Delhi Disguise Version 1 Face Database - this dataset contains 681 images of 75 subjects with different kinds of disguise variations. Version 1 of the dataset consists of images captured in the visible spectrum. There is a further subset of this called the IIITD In and Beyond Visible Spectrum Disguise database, which includes both visible and thermal versions of the images.

IIIT-D Kinect RGB-D Face Database - this is a database containing 3D RGB-D images giving face recognition with texture and attribute features. The RGB-D images were captured using low-cost sensors such as Kinect.

WhoIsIt (WIT) Face Database - this gives a list of URLs, along with a tool to download the images that are present at these URLs.

Sketch Face Database (no longer available) - this is a database containing three types of sketches: viewed, semi-forensic, and forensic. The IIIT-D Viewed Sketch Database comprises 238 sketch-digital image pairs that were drawn by a professional sketch artist from digital images collected from different sources. The sketches in the IIIT-Delhi Semi-forensic Sketch Database were drawn based on the memory of sketch artists rather than the description of eyewitnesses. The sketches in the Forensic Sketch Database were drawn by a sketch artist from the description of an eyewitness based on his or her recollection of the crime scene.

Look Alike Face Database - Look Alike face database consists of images relating to 50 well-known personalities (from western, eastern, and Asian origins) and their look-alikes.

Plastic Surgery Face Database - this contains 1800 pre- and post-surgery images pertaining to 900 subjects. There are 519 image pairs corresponding to local surgeries and 381 cases of global surgery (e.g., skin peeling and facelift).

28. Indian Movie Face database (IMFDB)

This dataset consists of 34512 images of 100 Indian actors collected from more than 100 videos. There is a detailed annotation of every image in terms of age, pose, gender, expression and type of occlusion.

29. Japanese Female Facial Expression (JAFFE) Database

This database contains 213 images of 7 facial expressions (6 basic facial expressions + 1 neutral) depicting 10 Japanese female models. Each image has been rated on 1 of 6 emotion adjectives.

30. Karolinska Directed Emotional Faces (KDEF)

This is a set of 4900 pictures of human facial expressions of emotion. The material was originally developed to be used for psychological and medical research purposes and is particularly suitable for perception, attention, emotion, memory and backward masking experiments.

31. Labeled Faces in the Wild

The Labeled Faces in the Wild collection contains 13,000 images of faces collected from the web, labeled with the person's name. There are a number of additional databases created using the photos from this collection.

The Face Detection Data Set and Benchmark (FDDB) is a data set of face regions designed for studying the problem of unconstrained face detection. It contains the annotations for 5171 faces in a set of 2845 images.

The Labeled Faces in the Wild-a (LFW-a) collection contains the same images available in the original Labeled Faces in the Wild data set. However, here they are provided after alignment using commercial face alignment software.

The LFWcrop Database is a cropped version of the Labeled Faces in the Wild (LFW) dataset, keeping only the center portion of each image (i.e. the face). Most of the background is eliminated.
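To make the idea of keeping only the center portion concrete, here is a minimal Python sketch of a center crop. It is not the LFWcrop authors' procedure: the function name `center_crop`, the list-of-rows image representation, and the toy sizes are all assumptions chosen for illustration.

```python
def center_crop(image, crop_height, crop_width):
    """Return the central crop_height x crop_width region of a 2D image.

    `image` is a list of rows, each row a list of pixel values
    (a hypothetical stand-in for a real image array).
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if crop_height > height or crop_width > width:
        raise ValueError("crop size exceeds image size")
    top = (height - crop_height) // 2
    left = (width - crop_width) // 2
    return [row[left:left + crop_width] for row in image[top:top + crop_height]]


# Toy 6x6 "image" whose pixel value encodes its (row, column) position.
image = [[10 * r + c for c in range(6)] for r in range(6)]
cropped = center_crop(image, 2, 2)
print(cropped)  # [[22, 23], [32, 33]]
```

With real photographs the same slicing would typically be done on an image array, and the crop size would be chosen so that the face fills most of the result.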

32. Labeled Wikipedia Faces (LWF)

The Labeled Wikipedia Faces (LWF) is a dataset of 8,500 faces for about 1,500 identities, taken from Wikipedia. The LWF facial images are aligned with faces in the Labeled Faces in the Wild database so face verification experiments can be performed and compared.

33. Long Distance Heterogeneous Face Database (no longer available)

This contains face images of 100 subjects (70 males and 30 females). There are both visible (VIS) and near-infrared (NIR) face images at distances of 60m, 100m, and 150m outdoors and a 1m distance indoors.

34. McGill Real-world (Unconstrained) Face Video Database

This database contains 18,000 video frames at 640x480 resolution from 60 video sequences. Each sequence was recorded showing a different subject (31 female and 29 male). There is a gender label for each image.

35. Meissner Caucasian and African American set

This set currently includes African American and Caucasian male faces in two poses (smiling with casual clothing and non-smiling with burgundy sweatshirt).

36. MIT-CBCL Face Recognition Database

This database contains face images of 10 subjects. There are 200 images per subject, with varied illumination, pose, and background. There are also synthetic images (324 per subject) rendered from 3D head models of the ten models.

37. Makeup Datasets

Makeup Datasets is a series of datasets of female face images assembled for studying the impact of makeup on face recognition.

MIW (Makeup in the 'Wild') Dataset - There is one set of data, Makeup in the 'Wild' that contains face images of subjects with and without makeup that were obtained from the internet. There are 154 images of 125 subjects, 77 with makeup and 77 without makeup.

VMU (Virtual Makeup) Dataset - these are face images of Caucasian female subjects in the FRGC repository. They have been synthetically modified to simulate the application of makeup. There are 204 images of 51 subjects. Each subject has one image with no makeup, one with lipstick, one with eye makeup, and one showing a full makeover.

YMU (YouTube Makeup) Dataset - these are face images of subjects obtained from YouTube video makeup tutorials. There are 604 images of 151 subjects. Each subject has two images before and two images after makeup application.

38. MOBIO - Mobile Biometry Face and Speech Database

This database consists of bi-modal (audio and video) data taken from 152 people (100 males and 52 females). This data differs from the rest of those in this guide, in that it consists of people speaking, rather than still photos.

39. MORPH Database (Craniofacial Longitudinal Morphological Face Database)

This is the largest publicly available longitudinal face database. There are thousands of facial images of individuals collected in real-world conditions. Album 1 consists of a series of digital scans of 515 photographs of a set of individuals taken at various times between October 26, 1962 and April 7, 1998. This shows their aging over the period. There is a more recent second album, which currently contains 55,134 images of 13,000 individuals collected over four years. Both albums include metadata for race, gender, date of birth, and date of acquisition.

40. Natural Visible and Infrared Facial Expression Database (USTC-NVIE)

This is a natural, visible and infrared facial expression database. It contains both spontaneous and posed expressions of more than 100 subjects, recorded simultaneously by both a visible and an infrared thermal camera. Illumination is provided from three different directions.

41. NIST Mugshot Identification Database

This database consists of three CD-ROMs of 3248 images of variable size using lossless compression. There are mugshots of 1573 individuals - 1495 male and 78 female. In most cases, there are both front and side (profile) views.

42. The OUI-Adience Face Image Project

This contains a data set and benchmark of face photos to assist with the study of age and gender recognition. The aim is for the data to be as realistic as possible to the challenges of real-world imaging conditions. It attempts to capture the variations in appearance, noise, pose, and lighting that can be expected from images taken without careful preparation or posing. The images included in the set come from Flickr albums, released by their authors to the general public under the Creative Commons (CC) license.

43. PhotoFace: Face recognition using photometric stereo

PhotoFace was a project undertaken at UWE Bristol. One of its aims was to capture a new 3D face database for testing within the project and for the benefit of the worldwide face recognition research community. This unique 3D face database contains 3187 sessions of 453 subjects, captured in two recording periods of approximately six months each.

44. Psychological Image Collection at Stirling (PICS)

This is a collection of images useful for conducting experiments in psychology, primarily faces. There are a number of sets of data.

Stirling/ESRC 3D Face Database - this is a collection of 3D face images, along with the tools to manipulate and display them. Currently, there are 45 male and 54 female sets available.

2D Face Sets - there are currently 10 sets of 2D faces: Aberdeen, Iranian women, Nottingham scans, Nott faces originals, Stirling faces, Pain expressions, Pain expression subset, Utrecht ECVP, Mooney_LR, and Mooney MF.

45. PubFig: Public Figures Face Database

This is a large, real-world face dataset consisting of 58,797 images of 200 people collected from the internet. These images are taken in completely uncontrolled situations without the cooperation of the subjects.

46. PUT Face Database

The PUT Face database includes 9971 images of 100 people. Their goal was to provide credible data for systematic performance evaluation of face localization, feature extraction and recognition algorithms. There are images for each person in five series:

neutral face expression with the head turning from left to right (approx. 30 images)

neutral face expression with the head nodding from the raised to the lowered position (approx. 20 images)

neutral face expression with the raised head turning from left to right (approx. 20 images)

neutral face expression with the lowered head turning from left to right (approx. 20 images)

no constraints regarding the face pose or expression, some images with glasses (approx. 10 images).
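The approximate per-series counts above can be checked against the reported total of 9971 images for 100 people. The Python sketch below simply adds them up; the dictionary keys are shorthand labels invented here, not names used by the PUT database.

```python
# Approximate images per person in each of the five PUT series (from the list above).
approx_images_per_series = {
    "head turning left to right": 30,
    "head nodding": 20,
    "raised head turning": 20,
    "lowered head turning": 20,
    "unconstrained": 10,
}

people = 100
reported_total = 9971

per_person = sum(approx_images_per_series.values())
estimated_total = per_person * people
difference = estimated_total - reported_total

print(per_person)       # 100
print(estimated_total)  # 10000
print(difference)       # 29
```

The estimate lands within 29 images of the reported total, which is consistent with the per-series figures being approximate.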

47. Radboud face database

This is a set of pictures of 67 models (including Caucasian males and females, Caucasian children, both boys and girls, and Moroccan Dutch males) displaying 8 emotional expressions, based on the Facial Action Coding System.

48. SCface - Surveillance Cameras Face Database

SCface is a database containing static images of human faces that were taken in an uncontrolled indoor environment. Five video surveillance cameras of various qualities were used to capture the images. The database contains 4160 static images (in both visible and infrared spectrums) of 130 subjects. The SCface database was created as a means of testing face recognition algorithms in real-world conditions.

49. SCfaceDB Landmarks

This is a set of 21 facial landmarks, annotated manually by a human operator, for the 4,160 face images (representing 130 users) of the SCface database.

50. Senthilkumar Face Database Version 1

The Senthil face database contains 80 face images (the website contradicts itself as to whether they are color or black and white) of 5 men. There are frontal views of the faces with different facial expressions, occlusions and brightness conditions. There are 16 images of each person.

51. The Sheffield (previously UMIST) Face Database

This database contains 564 images representing 20 individuals, who are of mixed race, gender and appearance. Each individual is depicted in a range of poses from frontal to profile views. Researchers can download either the full database or the pre-cropped database.

52. Siblings Database of the VG & V Group

The Siblings Database contains different datasets depicting images of individuals related by sibling relationships. It is split into two sets of photos: HQfaces and LQfaces.

HQfaces contains high quality images (4256x2832) depicting 184 individuals (92 pairs of siblings). There are profile images for 79 pairs. 56 pairs of siblings are depicted with smiling frontal and profile pictures. The siblings are aged between 13 and 50, averaging 23.1. All subjects are Caucasian. 57% of them are male and 43% female. All the images are annotated with the position of 76 landmarks on the frontal images and 12 landmarks on the profile images.

LQfaces contains 98 pairs of siblings (196 individuals) found over the Internet, with most of the subjects being celebrities. The poses are semi-frontal. As these photographs were not taken for the purpose of facial recognition, they have a wide variety of resolutions, lighting, makeup etc. The individuals are 45.5% male and mainly Caucasian.

53. Social Perception Lab Database Sets, Department of Psychology, Princeton University

This is a series of 15 face databases. The first seven databases contain images of 25 distinct identities manipulated on different traits: attractiveness, competence, dominance, extroversion, likeability, threat, and trustworthiness, for both shape and reflectance. The next four databases consist of faces that have been manipulated on computer models developed in the lab on shape only. Two data sets consist of un-manipulated face images that have been rated on a number of trait dimensions (e.g. attractiveness, threat, trustworthiness). One database contains photos of politicians that have been judged on competence. The final database contains information on the videos used in a study on detecting preferences from facial expressions.

54. Texas 3D Face Recognition Database (Texas 3DFRD)

The Texas 3D Face Recognition database is a collection of 1149 pairs of facial color and range images of 105 adults. These images were acquired using a stereo imaging system at a very high spatial resolution of 0.32 mm along the x, y, and z dimensions. Information is provided giving the subjects’ gender, ethnicity, facial expression, and the locations of 25 anthropometric facial fiducial points.

55. The UCD Colour Face Image Database for Face Detection

This is a color face image database created for direct benchmarking of automatic face detection algorithms. The images were acquired from a wide variety of sources such as digital cameras, pictures scanned using photo-scanner, other face databases and the World Wide Web.

56. The University of Milano Bicocca 3D face database

The UMB-DB has a particular focus on facial occlusions, e.g. scarves, hats, hands, eyeglasses. There are 143 subjects (98 male, 45 female). In total there are 1473 images, 590 of which have occlusions. The images depict 4 facial expressions: neutral, smiling, bored, hungry.

57. The University of Oulu Physics-Based Face Database

This database was collected at the Machine Vision and Media Processing Unit, University of Oulu. It contains color images of faces using different illuminants and camera calibration conditions as well as skin spectral reflectance measurements of each person. There are 125 different faces. Each person's image series contains 16 frontal views taken under different illuminant calibration conditions.

58. VADANA: Vims Appearance Dataset for facial ANAlysis

This dataset is designed to provide a large number of high-quality digital images for a limited number of subjects. The images provide a natural range of pose, expression and illumination variation. The images of particular subjects span a long timeframe.

59. Yale Face Database

This database contains 165 grayscale images in GIF format of 15 individuals - 11 images per subject. They display the following facial expressions or configurations: center-light, w/glasses, happy, left-light, w/no glasses, normal, right-light, sad, sleepy, surprised, and wink.

60. YouTube Faces Database

This is a database of face videos designed for studying the problem of unconstrained face recognition in videos. It contains 3,425 videos of 1,595 different people. All the videos were downloaded from YouTube.

Cole Calistra

Cole is the CTO at Kairos, a Human Analytics startup that radically changes how companies understand people. He loves all things cloud and making great products come to life.

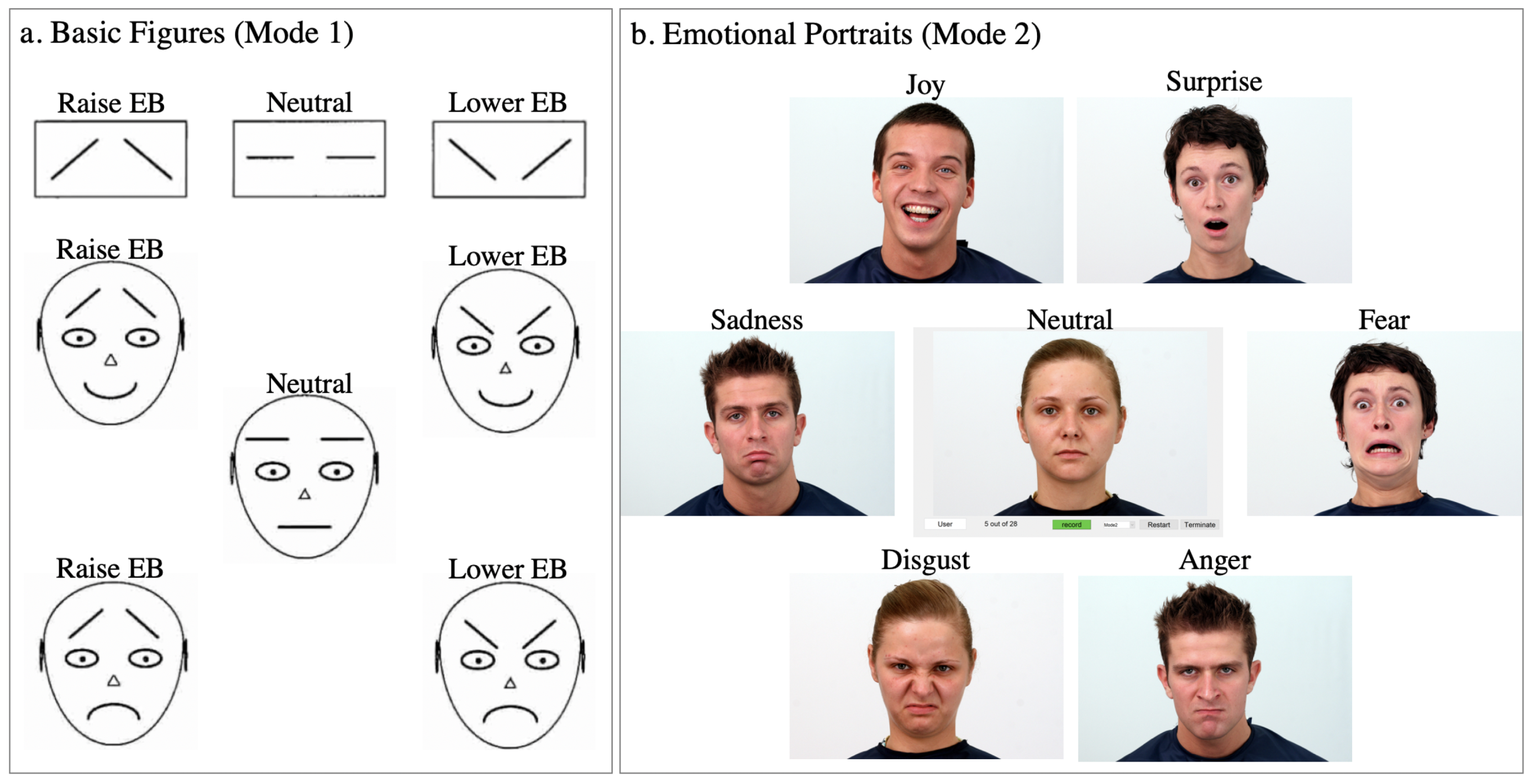

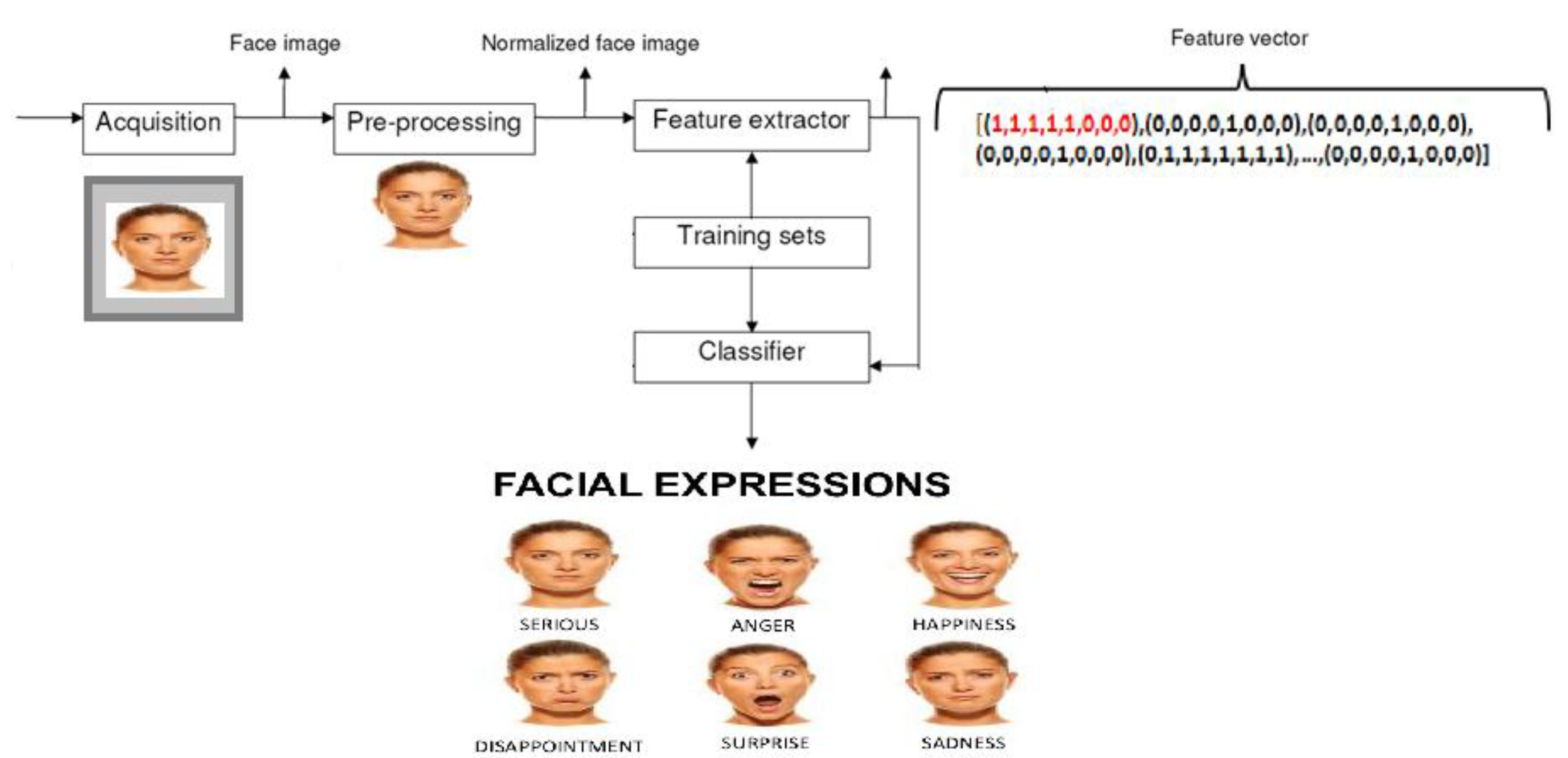

The Facial Action Coding System (FACS) is a system to taxonomize human facial movements by their appearance on the face, based on a system originally developed by a Swedish anatomist named Carl-Herman Hjortsjö.[1] It was later adopted by Paul Ekman and Wallace V. Friesen, and published in 1978.[2] Ekman, Friesen, and Joseph C. Hager published a significant update to FACS in 2002.[3] FACS encodes movements of individual facial muscles from slight, momentary changes in facial appearance.[4] It is a common standard for systematically categorizing the physical expression of emotions, and it has proven useful to psychologists and to animators. Because manual coding is subjective and time-consuming, FACS has also been implemented as an automated computer system that detects faces in videos, extracts the geometrical features of the faces, and then produces temporal profiles of each facial movement.[4]

Uses

Using FACS,[5] human coders can manually code nearly any anatomically possible facial expression, deconstructing it into the specific action units (AUs) and their temporal segments that produced the expression. As AUs are independent of any interpretation, they can be used for any higher-order decision-making process, including recognition of basic emotions or pre-programmed commands for an ambient intelligent environment. The FACS Manual is over 500 pages in length and provides the AUs, as well as Ekman's interpretation of their meaning.

FACS defines AUs, which are a contraction or relaxation of one or more muscles. It also defines a number of Action Descriptors, which differ from AUs in that the authors of FACS have not specified the muscular basis for the action and have not distinguished specific behaviors as precisely as they have for the AUs.

For example, FACS can be used to distinguish two types of smiles as follows:[6]

- Insincere and voluntary Pan-Am smile: contraction of zygomatic major alone

- Sincere and involuntary Duchenne smile: contraction of zygomatic major and inferior part of orbicularis oculi.
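The distinction above can be sketched as a small rule on action units. This is only an illustration: it assumes the zygomatic major corresponds to AU 12 (lip corner puller) and the orbicularis oculi to AU 6 (cheek raiser), as in the main code table of this article, and the function name `classify_smile` is hypothetical. It ignores intensity and timing, which a trained coder would also consider.

```python
# AU numbers taken from the main code table:
# 6 = cheek raiser (orbicularis oculi), 12 = lip corner puller (zygomaticus major).
CHEEK_RAISER = 6
LIP_CORNER_PULLER = 12


def classify_smile(active_aus):
    """Label a collection of active AU numbers as 'Duchenne', 'Pan-Am' or 'no smile'."""
    aus = set(active_aus)
    if LIP_CORNER_PULLER not in aus:
        return "no smile"      # zygomatic major is not contracted
    if CHEEK_RAISER in aus:
        return "Duchenne"      # zygomatic major together with orbicularis oculi
    return "Pan-Am"            # zygomatic major alone


print(classify_smile({12}))
print(classify_smile({6, 12}))
```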

Although the labeling of expressions currently requires trained experts, researchers have had some success in using computers to automatically identify FACS codes.[7] Computer graphical face models, such as CANDIDE or Artnatomy, allow expressions to be artificially posed by setting the desired action units.

FACS has been proposed for use in the analysis of depression,[8] and the measurement of pain in patients unable to express themselves verbally.[9]

FACS is designed to be self-instructional. People can learn the technique from a number of sources including manuals and workshops,[10] and obtain certification through testing.[11] The original FACS has been modified to analyze facial movements in several non-human primates, namely chimpanzees,[12] rhesus macaques,[13] gibbons and siamangs,[14] and orangutans.[15] More recently, it was developed also for domestic species, including the dog,[16] the horse[17] and the cat.[18] Similarly to the human FACS, the animal FACS have manuals available online for each species with the respective certification tests.[19]

Thus, FACS can be used to compare facial repertoires across species due to its anatomical basis. A study conducted by Vick and others (2006) suggests that FACS can be modified by taking differences in underlying morphology into account. Such considerations enable a comparison of the homologous facial movements present in humans and chimpanzees, to show that the facial expressions of both species result from extremely notable appearance changes. The development of FACS tools for different species allows the objective and anatomical study of facial expressions in communicative and emotional contexts. Furthermore, a cross-species analysis of facial expressions can help to answer interesting questions, such as which emotions are uniquely human.[20]

EMFACS (Emotional Facial Action Coding System)[21] and FACSAID (Facial Action Coding System Affect Interpretation Dictionary)[22] consider only emotion-related facial actions. Examples of these are:

| Emotion | Action units |
|---|---|
| Happiness | 6+12 |
| Sadness | 1+4+15 |
| Surprise | 1+2+5B+26 |
| Fear | 1+2+4+5+7+20+26 |
| Anger | 4+5+7+23 |
| Disgust | 9+15+17 |
| Contempt | R12A+R14A |
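As a rough illustration of how such a table might be used, the sketch below stores the combinations as strings and reports every emotion whose action units are all present in an observed set. The names `EMOTION_PROTOTYPES`, `au_numbers` and `matching_emotions` are hypothetical, and discarding the side and intensity letters is a simplifying assumption rather than anything EMFACS prescribes.

```python
import re

# Emotion-related AU combinations, copied from the table above.
EMOTION_PROTOTYPES = {
    "Happiness": "6+12",
    "Sadness": "1+4+15",
    "Surprise": "1+2+5B+26",
    "Fear": "1+2+4+5+7+20+26",
    "Anger": "4+5+7+23",
    "Disgust": "9+15+17",
    "Contempt": "R12A+R14A",
}


def au_numbers(code):
    """Reduce a code such as 'R12A+R14A' to its set of AU numbers, here {12, 14}."""
    return {int(n) for n in re.findall(r"\d+", code)}


def matching_emotions(observed_aus):
    """Return the emotions whose listed AUs are all contained in observed_aus."""
    observed = set(observed_aus)
    return [
        name
        for name, code in EMOTION_PROTOTYPES.items()
        if au_numbers(code) <= observed
    ]


print(matching_emotions({6, 12}))
print(matching_emotions({1, 2, 4, 5, 7, 20, 26}))
```

Because the surprise combination is a subset of the fear combination once the intensity letter is dropped, the second call reports both emotions. A pure subset test is therefore ambiguous, and a practical system would need additional rules.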

Codes for action units

For clarification, FACS is an index of facial expressions, but does not actually provide any bio-mechanical information about the degree of muscle activation. Though muscle activation is not part of FACS, the main muscles involved in the facial expression have been added here for the benefit of the reader.

Action units (AUs) are the fundamental actions of individual muscles or groups of muscles.

Action descriptors (ADs) are unitary movements that may involve the actions of several muscle groups (e.g., a forward‐thrusting movement of the jaw). The muscular basis for these actions hasn't been specified and specific behaviors haven't been distinguished as precisely as for the AUs.

For the most accurate annotation, FACS suggests agreement from at least two independent certified FACS encoders.
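The text does not say how agreement between two coders should be quantified. One simple option, shown here purely as an assumption, is the Dice coefficient over the sets of AUs each coder scored for the same event: twice the number of shared AUs divided by the total number of AUs scored by both. The function name `agreement_index` is hypothetical.

```python
def agreement_index(coder_a, coder_b):
    """Dice coefficient between two coders' AU sets (assumed metric, not from the text)."""
    a, b = set(coder_a), set(coder_b)
    if not a and not b:
        return 1.0  # both coders scored nothing, which counts as full agreement
    return 2 * len(a & b) / (len(a) + len(b))


# Coder A scored AUs 1, 2 and 4; coder B scored AUs 1, 2 and 5.
print(round(agreement_index({1, 2, 4}, {1, 2, 5}), 3))
```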

Intensity scoring

Intensities of FACS are annotated by appending letters A–E (for minimal-maximal intensity) to the action unit number (e.g. AU 1A is the weakest trace of AU 1 and AU 1E is the maximum intensity possible for the individual person).

- A Trace

- B Slight

- C Marked or pronounced

- D Severe or extreme

- E Maximum

Other letter modifiers

There are other modifiers present in FACS codes for emotional expressions, such as 'R' which represents an action that occurs on the right side of the face and 'L' for actions which occur on the left. An action which is unilateral (occurs on only one side of the face) but has no specific side is indicated with a 'U' and an action which is unilateral but has a stronger side is indicated with an 'A.'
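The intensity letters and side modifiers can be combined into a small parser for codes such as `R12A+R14A`. The sketch below is a minimal illustration under stated assumptions: the modifier letter is taken to come before the AU number and the intensity letter after it, as in the contempt example, and the names `ScoredAU` and `parse_facs` are hypothetical. M-prefixed codes such as M55 are not handled.

```python
import re
from typing import NamedTuple, Optional

INTENSITY_LABELS = {
    "A": "Trace",
    "B": "Slight",
    "C": "Marked or pronounced",
    "D": "Severe or extreme",
    "E": "Maximum",
}
SIDE_LABELS = {
    "R": "right",
    "L": "left",
    "U": "unilateral, no specific side",
    "A": "unilateral, one side stronger",
}

# Optional side prefix, AU number, optional intensity suffix (assumed layout).
_TOKEN = re.compile(r"^([RLUA]?)(\d+)([A-E]?)$")


class ScoredAU(NamedTuple):
    number: int
    side: Optional[str]
    intensity: Optional[str]


def parse_facs(code):
    """Split a '+'-joined FACS code into ScoredAU entries."""
    result = []
    for part in code.split("+"):
        match = _TOKEN.match(part.strip())
        if match is None:
            raise ValueError(f"unrecognised FACS token: {part!r}")
        prefix, number, suffix = match.groups()
        result.append(
            ScoredAU(int(number), SIDE_LABELS.get(prefix), INTENSITY_LABELS.get(suffix))
        )
    return result


for au in parse_facs("R12A+R14A"):
    print(au)
```

Missing modifiers are reported as `None` rather than guessed, so `parse_facs("1+2+5B+26")` yields an intensity only for AU 5.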

List of action units and action descriptors (with underlying facial muscles)

Main codes

| AU number | FACS name | Muscular basis |
|---|---|---|
| 0 | Neutral face | |
| 1 | Inner brow raiser | frontalis (pars medialis) |
| 2 | Outer brow raiser | frontalis (pars lateralis) |
| 4 | Brow lowerer | depressor glabellae, depressor supercilii, corrugator supercilii |
| 5 | Upper lid raiser | levator palpebrae superioris, superior tarsal muscle |
| 6 | Cheek raiser | orbicularis oculi (pars orbitalis) |
| 7 | Lid tightener | orbicularis oculi (pars palpebralis) |
| 8 | Lips toward each other | orbicularis oris |
| 9 | Nose wrinkler | levator labii superioris alaeque nasi |
| 10 | Upper lip raiser | levator labii superioris, caput infraorbitalis |
| 11 | Nasolabial deepener | zygomaticus minor |
| 12 | Lip corner puller | zygomaticus major |
| 13 | Sharp lip puller | levator anguli oris (also known as caninus) |
| 14 | Dimpler | buccinator |
| 15 | Lip corner depressor | depressor anguli oris (also known as triangularis) |
| 16 | Lower lip depressor | depressor labii inferioris |
| 17 | Chin raiser | mentalis |
| 18 | Lip pucker | incisivii labii superioris and incisivii labii inferioris |
| 19 | Tongue show | |
| 20 | Lip stretcher | risorius w/ platysma |
| 21 | Neck tightener | platysma |
| 22 | Lip funneler | orbicularis oris |
| 23 | Lip tightener | orbicularis oris |
| 24 | Lip pressor | orbicularis oris |
| 25 | Lips part | depressor labii inferioris, or relaxation of mentalis or orbicularis oris |
| 26 | Jaw drop | masseter; relaxed temporalis and internal pterygoid |
| 27 | Mouth stretch | pterygoids, digastric |
| 28 | Lip suck | orbicularis oris |

Head movement codes

| AU number | FACS name | Action |
|---|---|---|
| 51 | Head turn left | |
| 52 | Head turn right | |
| 53 | Head up | |
| 54 | Head down | |
| 55 | Head tilt left | |
| M55 | Head tilt left | The onset of the symmetrical 14 is immediately preceded or accompanied by a head tilt to the left. |
| 56 | Head tilt right | |
| M56 | Head tilt right | The onset of the symmetrical 14 is immediately preceded or accompanied by a head tilt to the right. |
| 57 | Head forward | |
| M57 | Head thrust forward | The onset of 17+24 is immediately preceded, accompanied, or followed by a head thrust forward. |
| 58 | Head back | |
| M59 | Head shake up and down | The onset of 17+24 is immediately preceded, accompanied, or followed by an up-down head shake (nod). |
| M60 | Head shake side to side | The onset of 17+24 is immediately preceded, accompanied, or followed by a side to side head shake. |
| M83 | Head upward and to the side | The onset of the symmetrical 14 is immediately preceded or accompanied by a movement of the head, upward and turned and/or tilted to either the left or right. |

Eye movement codes

| AU number | FACS name | Action |
|---|---|---|
| 61 | Eyes turn left | |
| M61 | Eyes left | The onset of the symmetrical 14 is immediately preceded or accompanied by eye movement to the left. |
| 62 | Eyes turn right | |
| M62 | Eyes right | The onset of the symmetrical 14 is immediately preceded or accompanied by eye movement to the right. |
| 63 | Eyes up | |
| 64 | Eyes down | |
| 65 | Walleye | |
| 66 | Cross-eye | |
| M68 | Upward rolling of eyes | The onset of the symmetrical 14 is immediately preceded or accompanied by an upward rolling of the eyes. |
| 69 | Eyes positioned to look at other person | The 4, 5, or 7, alone or in combination, occurs while the eye position is fixed on the other person in the conversation. |
| M69 | Head and/or eyes look at other person | The onset of the symmetrical 14 or AUs 4, 5, and 7, alone or in combination, is immediately preceded or accompanied by a movement of the eyes or of the head and eyes to look at the other person in the conversation. |

Visibility codes

| AU number | FACS name |
|---|---|
| 70 | Brows and forehead not visible |
| 71 | Eyes not visible |
| 72 | Lower face not visible |
| 73 | Entire face not visible |
| 74 | Unscorable |

Gross behavior codes

These codes are reserved for recording information about gross behaviors that may be relevant to the facial actions that are scored.

| AU number | FACS name | Muscular basis |
|---|---|---|
| 29 | Jaw thrust | |
| 30 | Jaw sideways | |
| 31 | Jaw clencher | masseter |
| 32 | [Lip] bite | |
| 33 | [Cheek] blow | |
| 34 | [Cheek] puff | |
| 35 | [Cheek] suck | |
| 36 | [Tongue] bulge | |
| 37 | Lip wipe | |
| 38 | Nostril dilator | nasalis (pars alaris) |
| 39 | Nostril compressor | nasalis (pars transversa) and depressor septi nasi |
| 40 | Sniff | |
| 41 | Lid droop | Levator palpebrae superioris (relaxation) |
| 42 | Slit | Orbicularis oculi muscle |
| 43 | Eyes closed | Relaxation of Levator palpebrae superioris |
| 44 | Squint | Corrugator supercilii and orbicularis oculi muscle |
| 45 | Blink | Relaxation of Levator palpebrae superioris; contraction of orbicularis oculi (pars palpebralis) |
| 46 | Wink | orbicularis oculi |
| 50 | Speech | |
| 80 | Swallow | |
| 81 | Chewing | |
| 82 | Shoulder shrug | |
| 84 | Head shake back and forth | |
| 85 | Head nod up and down | |
| 91 | Flash | |
| 92 | Partial flash | |
| 97* | Shiver/tremble | |
| 98* | Fast up-down look | |
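The numeric ranges used by the code tables in this article can be collected into a simple lookup, for example to validate annotations before analysis. The sketch below is an illustration only: the sets are transcribed from the tables above, the M-prefixed codes are left out for simplicity, and the names `CODE_GROUPS` and `code_group` are hypothetical.

```python
# AU numbers per group, transcribed from the tables in this article
# (M-prefixed codes such as M55 are deliberately omitted).
CODE_GROUPS = {
    "main": {0, 1, 2} | set(range(4, 29)),
    "head movement": set(range(51, 59)),
    "eye movement": set(range(61, 67)) | {69},
    "visibility": set(range(70, 75)),
    "gross behavior": set(range(29, 47)) | {50, 80, 81, 82, 84, 85, 91, 92, 97, 98},
}


def code_group(au_number):
    """Return the table a numeric code belongs to, or None if it is not listed."""
    for group, numbers in CODE_GROUPS.items():
        if au_number in numbers:
            return group
    return None


for number in (12, 45, 53, 64, 72, 3):
    print(number, code_group(number))
```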

References

- Hjortsjö CH (1969). Man's face and mimic language.

- Ekman P, Friesen W (1978). Facial Action Coding System: A Technique for the Measurement of Facial Movement. Palo Alto: Consulting Psychologists Press.

- Ekman P, Friesen WV, Hager JC (2002). Facial Action Coding System: The Manual on CD ROM. Salt Lake City: A Human Face.

- Hamm J, Kohler CG, Gur RC, Verma R (September 2011). 'Automated Facial Action Coding System for dynamic analysis of facial expressions in neuropsychiatric disorders'. Journal of Neuroscience Methods. 200 (2): 237–56. doi:10.1016/j.jneumeth.2011.06.023. PMC 3402717. PMID 21741407.

- Ramachandran VS (2012). 'Microexpression and macroexpression'. In Ramachandran VS (ed.). Encyclopedia of Human Behavior. 2. Oxford: Elsevier/Academic Press. pp. 173–183. ISBN 978-0-12-375000-6.

- Del Giudice M, Colle L (May 2007). 'Differences between children and adults in the recognition of enjoyment smiles'. Developmental Psychology. 43 (3): 796–803. doi:10.1037/0012-1649.43.3.796. PMID 17484588.

- Facial Action Coding System. Retrieved July 21, 2007.

- Reed LI, Sayette MA, Cohn JF (November 2007). 'Impact of depression on response to comedy: a dynamic facial coding analysis'. Journal of Abnormal Psychology. 116 (4): 804–9. CiteSeerX 10.1.1.307.6950. doi:10.1037/0021-843X.116.4.804. PMID 18020726.

- Lints-Martindale AC, Hadjistavropoulos T, Barber B, Gibson SJ (2007). 'A psychophysical investigation of the facial action coding system as an index of pain variability among older adults with and without Alzheimer's disease'. Pain Medicine. 8 (8): 678–89. doi:10.1111/j.1526-4637.2007.00358.x. PMID 18028046.

- Rosenberg EL. 'Example and web site of one teaching professional'. Archived from the original on 2009-02-06. Retrieved 2009-02-04.

- 'Facial Action Coding System'. Paul Ekman Group. Retrieved 2019-10-23.

- Parr LA, Waller BM, Vick SJ, Bard KA (February 2007). 'Classifying chimpanzee facial expressions using muscle action'. Emotion. 7 (1): 172–81. doi:10.1037/1528-3542.7.1.172. PMC 2826116. PMID 17352572.

- Parr LA, Waller BM, Burrows AM, Gothard KM, Vick SJ (December 2010). 'Brief communication: MaqFACS: A muscle-based facial movement coding system for the rhesus macaque'. American Journal of Physical Anthropology. 143 (4): 625–30. doi:10.1002/ajpa.21401. PMC 2988871. PMID 20872742.

- Waller BM, Lembeck M, Kuchenbuch P, Burrows AM, Liebal K (2012). 'GibbonFACS: A Muscle-Based Facial Movement Coding System for Hylobatids'. International Journal of Primatology. 33 (4): 809–821. doi:10.1007/s10764-012-9611-6.

- Caeiro CC, Waller BM, Zimmermann E, Burrows AM, Davila-Ross M (2012). 'OrangFACS: A Muscle-Based Facial Movement Coding System for Orangutans (Pongo spp.)'. International Journal of Primatology. 34: 115–129. doi:10.1007/s10764-012-9652-x.

- Waller BM, Peirce K, Caeiro CC, Scheider L, Burrows AM, McCune S, Kaminski J (2013). 'Paedomorphic facial expressions give dogs a selective advantage'. PLOS ONE. 8 (12): e82686. Bibcode:2013PLoSO...882686W. doi:10.1371/journal.pone.0082686. PMC 3873274. PMID 24386109.

- Wathan J, Burrows AM, Waller BM, McComb K (2015-08-05). 'EquiFACS: The Equine Facial Action Coding System'. PLOS ONE. 10 (8): e0131738. Bibcode:2015PLoSO..1031738W. doi:10.1371/journal.pone.0131738. PMC 4526551. PMID 26244573.

- Caeiro CC, Burrows AM, Waller BM (2017-04-01). 'Development and application of CatFACS: Are human cat adopters influenced by cat facial expressions?'. Applied Animal Behaviour Science. 189: 66–78. doi:10.1016/j.applanim.2017.01.005. ISSN 0168-1591.

- 'Home'. animalfacs.com. Retrieved 2019-10-23.

- Vick SJ, Waller BM, Parr LA, Smith Pasqualini MC, Bard KA (March 2007). 'A Cross-species Comparison of Facial Morphology and Movement in Humans and Chimpanzees Using the Facial Action Coding System (FACS)'. Journal of Nonverbal Behavior. 31 (1): 1–20. doi:10.1007/s10919-006-0017-z. PMC 3008553. PMID 21188285.

- Friesen W, Ekman P (1983), EMFACS-7: Emotional Facial Action Coding System. Unpublished manuscript, 2, University of California at San Francisco, p. 1

- 'Facial Action Coding System Affect Interpretation Dictionary (FACSAID)'. Archived from the original on 2011-05-20. Retrieved 2011-02-23.

External links

- Carl-Herman Hjortsjö, 'Man's face and mimic language' (the original Swedish title of the book is 'Människans ansikte och mimiska språket'; a more accurate translation would be 'Man's face and facial language')